Introduction: From Generic to Genius – Tailoring ChatGPT for Your Brand and Operations

Large language models (LLMs) like ChatGPT have moved from niche technology to an everyday part of business operations. Off-the-shelf ChatGPT is remarkably powerful, but it is generic: it does not know your brand’s voice or your company’s jargon. Picture a customer support bot that keeps getting your product names wrong, a content generator that produces bland copy, or an internal knowledge assistant that struggles with your operational protocols. In cases like these, customization is a necessity rather than a nice-to-have.

Customizing ChatGPT for your brand and operations turns a generic AI assistant into a specialized one. A customized assistant can draw on your product catalog, adhere to your brand’s style guide, handle customer queries with the right context, and support internal processes in your company’s own terminology. This guide walks through the process step by step: defining goals, preparing data, choosing a customization approach, integrating the result, and iterating on it.

Step 1: Define Your Use Cases and Goals

Before you dive into data collection and model fine-tuning, you must clearly articulate what you want your tailored ChatGPT to achieve. This foundational step dictates every subsequent decision.

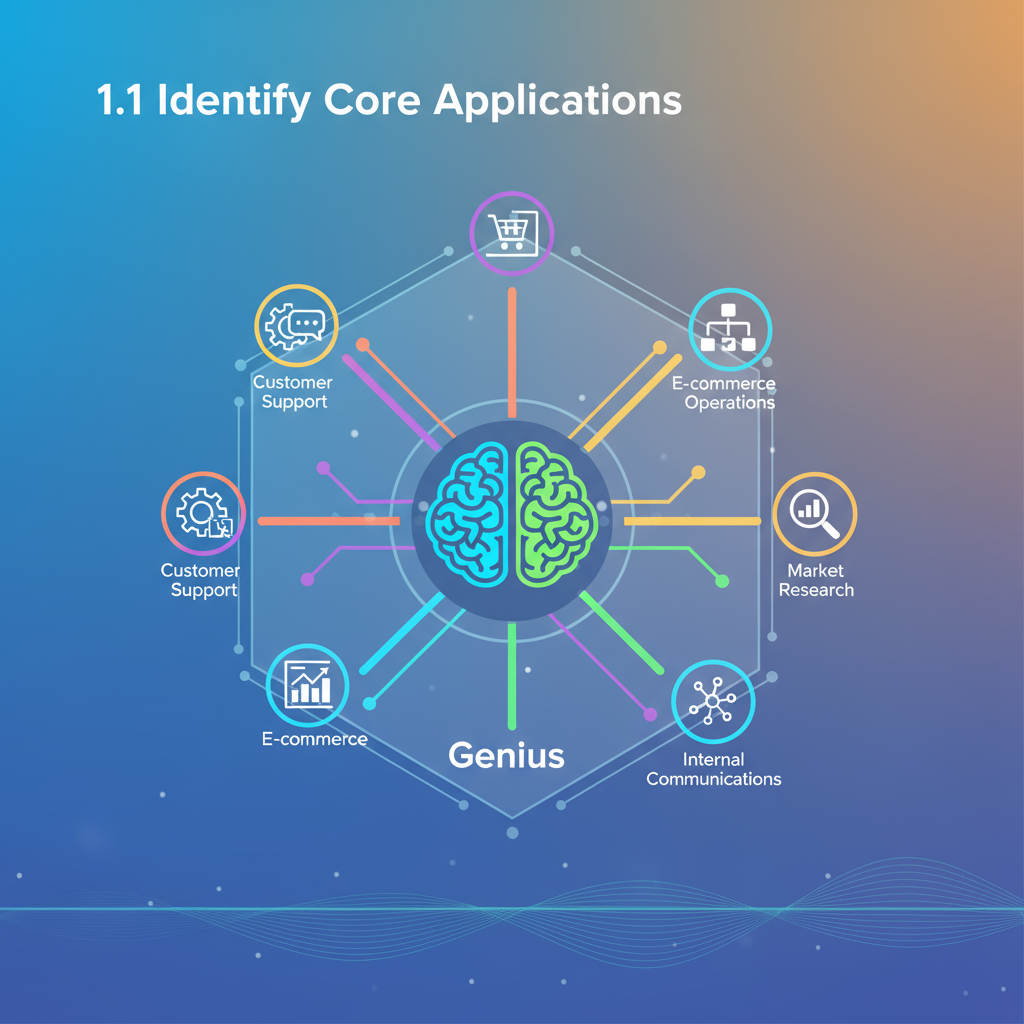

1.1 Identify Core Applications

Brainstorm specific areas where an AI assistant could add significant value. Examples include:

- Customer Support: Answering FAQs, troubleshooting common issues, guiding users through product setup.

- Content Generation: Drafting marketing copy, social media posts, blog outlines, email newsletters, product descriptions.

- Internal Knowledge Base: Assisting employees with HR policies, IT troubleshooting, operational procedures, project summaries.

- Sales & Marketing: Generating personalized outreach messages, summarizing customer profiles, creating lead qualification questions.

- Product Development: Summarizing user feedback, generating feature ideas, documenting specifications.

1.2 Set Measurable Objectives

Quantify what success looks like. This will help you track progress and justify your investment.

- Increase customer satisfaction (CSAT) by X%.

- Reduce average response time by Y minutes.

- Decrease internal knowledge search time by Z%.

- Generate A marketing slogans per week.

- Improve conversion rate by B%.
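One way to make these objectives trackable is to record a baseline, a target, and the latest reading for each metric, then compute how much of the gap has been closed. The sketch below is illustrative only: the `Objective` class, the metric names, and every number in it are hypothetical placeholders, not figures from a real deployment.

```python
from dataclasses import dataclass


@dataclass
class Objective:
    """A measurable goal with a baseline, a target, and the latest reading."""
    name: str
    baseline: float
    target: float
    current: float

    def progress(self) -> float:
        """Fraction of the baseline-to-target gap closed so far (may exceed 1.0)."""
        gap = self.target - self.baseline
        if gap == 0:
            return 1.0  # Baseline already equals the target.
        return (self.current - self.baseline) / gap


# Hypothetical numbers for illustration only.
objectives = [
    Objective("CSAT (%)", baseline=80.0, target=90.0, current=85.0),
    Objective("Avg. response time (min)", baseline=12.0, target=4.0, current=8.0),
]

for obj in objectives:
    print(f"{obj.name}: {obj.progress():.0%} of the way to target")
```

Because progress is measured relative to the gap, the same calculation works for metrics you want to raise (CSAT) and metrics you want to lower (response time).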

1.3 Understand Limitations and Ethical Considerations

No AI is perfect. Be aware of potential biases, inaccuracies, and ethical implications. Define boundaries for what the AI should and should not do or say.
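Boundaries are easier to enforce when they are written down as an explicit policy that is checked before a query reaches the model. The following is a minimal sketch under stated assumptions: the topic names, keywords, and `check_boundaries` helper are hypothetical, and keyword matching is a crude stand-in for whatever policy check you actually adopt.

```python
import re
from typing import Optional

# Hypothetical out-of-scope topics; replace with your own policy.
BLOCKED_TOPICS = {
    "legal advice": ["lawsuit", "sue", "legal advice"],
    "medical advice": ["diagnosis", "prescription", "medical advice"],
}


def check_boundaries(query: str) -> Optional[str]:
    """Return the name of the first blocked topic the query touches, or None."""
    text = query.lower()
    for topic, keywords in BLOCKED_TOPICS.items():
        for keyword in keywords:
            # Word boundaries avoid matching "sue" inside "issue".
            if re.search(rf"\b{re.escape(keyword)}\b", text):
                return topic
    return None


print(check_boundaries("Can I sue my landlord?"))
print(check_boundaries("I have an issue with my order"))
```

A check like this can run before the API call, so out-of-scope queries are routed to a fixed response or a human rather than to the model.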

Step 2: Curate and Prepare Your Brand-Specific Data

The quality and relevance of your training data are paramount. This data will be the foundation of your customized AI’s knowledge.

2.1 Gather Diverse Data Sources

Collect all relevant text-based information from your organization:

- Website Content: Product pages, FAQs, blog posts, company history, mission statement.

- Customer Support Logs: Transcripts of chat conversations, email exchanges, support tickets (anonymize sensitive data).

- Internal Documentation: Employee handbooks, operational manuals, IT guides, project documents, meeting minutes.

- Marketing Materials: Brand style guides, ad copy, press releases, social media posts.

- Product Specifications: User manuals, technical documents, feature lists.

2.2 Clean and Preprocess Data

Raw data is rarely ready for AI training. This step involves making it usable.

- Remove Redundancy: Eliminate duplicate information.

- Correct Errors: Fix typos, grammatical mistakes, and factual inaccuracies.

- Standardize Formatting: Ensure consistent use of headings, bullet points, and terminology.

- Anonymize Sensitive Information: Crucial for customer data, employee records, and confidential business intelligence. Use tools or scripts to replace names, addresses, account numbers, etc., with placeholders (e.g., `[CUSTOMER_NAME]`, `[ACCOUNT_NUMBER]`).

- Filter Irrelevant Content: Remove internal discussions, outdated policies, or anything outside your defined use cases.

```python
import re

def clean_text_data(text):
    """Normalize a raw text snippet and mask common personal identifiers."""
    text = text.lower()  # Convert to lowercase for consistency
    # Anonymize email addresses first so the URL rule cannot swallow them
    text = re.sub(r'[\w.+-]+@[\w-]+(?:\.[\w-]+)+', '[EMAIL_ADDRESS]', text)
    # Replace URLs that start with http(s):// or www., keeping trailing punctuation
    text = re.sub(r'(?:https?://|www\.)\S+?(?=[.,;:!?)]*(?:\s|$))', '[URL]', text)
    text = re.sub(r'\s+', ' ', text).strip()  # Remove extra whitespace
    text = re.sub(r'\.{2,}', '.', text)  # Replace multiple dots with a single one
    text = re.sub(r'\d{3}[-.\s]\d{3}[-.\s]\d{4}', '[PHONE_NUMBER]', text)  # Anonymize phone numbers
    # Add more specific cleaning/anonymization rules as needed
    return text

# Example usage:
raw_support_log = "Customer reported issue #456. Email: john.doe@example.com. Call was at 10:30 AM. My product is broken! Visit us at www.mycompany.com."
cleaned_log = clean_text_data(raw_support_log)
print(cleaned_log)
# Output: customer reported issue #456. email: [EMAIL_ADDRESS]. call was at 10:30 am. my product is broken! visit us at [URL].
```
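The "Remove Redundancy" step can be sketched in a similar way. The example below assumes that duplicates are identical once case and whitespace are normalized; near-duplicates (reworded versions of the same answer) would need a fuzzier comparison that is not shown here. The `normalize` and `deduplicate` helpers are illustrative names, not part of any library.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial variants compare equal."""
    return re.sub(r"\s+", " ", text).strip().lower()


def deduplicate(documents: list[str]) -> list[str]:
    """Keep the first occurrence of each document, comparing normalized text."""
    seen: set[str] = set()
    unique: list[str] = []
    for doc in documents:
        digest = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if digest not in seen:
            seen.add(digest)
            unique.append(doc)
    return unique


docs = [
    "How do I reset my password?",
    "how do I  reset my password? ",
    "What is the warranty period?",
]
print(deduplicate(docs))
```

Hashing the normalized text keeps memory use small for large document sets, since only the digests are stored for comparison.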

Step 3: Choose Your Customization Approach

Different levels of customization offer varying degrees of specificity and complexity.

3.1 Prompt Engineering and the Context Window (Recommended for most cases)

This is the most accessible method and often the most effective. Instead of altering the model’s core weights, you provide specific instructions and context in each API call.

- System Prompt: Define the AI’s persona, role, and overarching guidelines.

- User Prompt: The actual query or task.

- Contextual Data: Inject relevant brand-specific information directly into the prompt (e.g., a relevant FAQ entry, a section of an employee handbook).

```python
from openai import OpenAI

client = OpenAI(api_key="YOUR_OPENAI_API_KEY")

# Example of a system prompt establishing brand voice and role
system_message = {
    "role": "system",
    "content": "You are 'InnovateCo Support Bot', a helpful and friendly AI assistant for InnovateCo. "
               "Always maintain a positive and professional tone. Refer to 'InnovateCo' products as 'our innovative solutions'. "
               "If you don't know the answer, politely state that you cannot assist with that specific query and suggest visiting our knowledge base at innovateco.com/kb."
}

# Example of injecting relevant data (e.g., a specific FAQ entry)
product_faq_context = "FAQ: What is our 'Quantum Leap' software? Our Quantum Leap software is an AI-powered analytics platform designed to optimize supply chains, predict market trends, and reduce operational costs by up to 20% using proprietary machine learning algorithms."

user_query = "Tell me about your Quantum Leap software."

response = client.chat.completions.create(
    model="gpt-4",  # Or gpt-3.5-turbo, whichever is suitable
    messages=[
        system_message,
        {"role": "user", "content": f"{product_faq_context}\n\n{user_query}"}
    ],
    temperature=0.7  # Adjust for creativity vs. factual accuracy
)

print(response.choices[0].message.content)
```

Best for: Dynamic information, quick iterations, avoiding complex fine-tuning, adhering to brand voice and general customer service. This approach is often combined with Retrieval Augmented Generation (RAG), in which an external system retrieves relevant documents and inserts them into the prompt.
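To make the retrieval step concrete, here is a deliberately simple sketch that picks the most relevant snippet by counting shared words and then builds the user message. It is an assumption-laden stand-in: production RAG systems typically rank documents with embeddings or a search index rather than word overlap, and the `KNOWLEDGE_BASE` entries and helper names are hypothetical.

```python
import re

# Hypothetical knowledge-base snippets; in practice these come from your own documents.
KNOWLEDGE_BASE = [
    "Quantum Leap is an AI-powered analytics platform designed to optimize supply chains.",
    "To reset your password, visit the password reset page and enter your registered email.",
    "All hardware comes with a standard 2-year limited warranty.",
]


def tokenize(text: str) -> set[str]:
    """Split text into a set of lowercase word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Rank documents by how many query words they share and return the best ones."""
    query_tokens = tokenize(query)
    scored = [(len(query_tokens & tokenize(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_user_message(query: str) -> dict:
    """Prepend retrieved context to the user's question for the chat API."""
    context = "\n".join(retrieve(query, KNOWLEDGE_BASE))
    return {"role": "user", "content": f"Context:\n{context}\n\nQuestion: {query}"}


print(build_user_message("Tell me about your Quantum Leap software.")["content"])
```

The returned dictionary can be placed in the `messages` list alongside the system prompt, so only the snippets relevant to the current question consume prompt tokens.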

3.2 Fine-tuning (More Advanced)

Fine-tuning involves further training a pre-trained model on your specific dataset. This allows the model to learn patterns, language nuances, and specific responses inherent in your data, effectively teaching it to speak your brand’s language more naturally.

- Data Format: Requires training data in JSONL format (one JSON object per line), typically as conversational exchanges (prompt-completion pairs or multi-turn dialogues).

- Cost & Complexity: More expensive and time-consuming than prompt engineering, requiring more data and technical expertise.

- Benefits: Can reduce prompt token usage and improve consistency on specific tasks, and may make the model less prone to “hallucinations” on your core topics, though it does not eliminate them.

```json
{"messages": [{"role": "system", "content": "You are a friendly InnovateCo support agent."}, {"role": "user", "content": "How do I reset my password?"}, {"role": "assistant", "content": "To reset your password, please visit our password reset page at innovateco.com/password-reset. You'll need your registered email address."}]}
{"messages": [{"role": "system", "content": "You are a friendly InnovateCo support agent."}, {"role": "user", "content": "What is the warranty for the 'Alpha Processor'?"}, {"role": "assistant", "content": "All InnovateCo hardware, including the Alpha Processor, comes with a standard 2-year limited warranty. Details are available at innovateco.com/warranty."}]}
```

Best for: Deep domain-specific knowledge, mimicking specific response styles, generating high-volume niche content, or when prompt engineering becomes too verbose.
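Training files in the chat-style JSONL format shown in this section are usually generated by a script rather than written by hand. The sketch below converts question/answer pairs into lines of that shape and runs a basic sanity check on each line. The helper names are hypothetical, and the check covers only the basic structure, not every rule a fine-tuning service may enforce.

```python
import json

SYSTEM_PROMPT = "You are a friendly InnovateCo support agent."


def to_training_line(question: str, answer: str) -> str:
    """Serialize one question/answer pair as a single chat-format JSONL line."""
    record = {
        "messages": [
            {"role": "system", "content": SYSTEM_PROMPT},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }
    return json.dumps(record, ensure_ascii=False)


def validate_line(line: str) -> bool:
    """Check that a line parses as JSON and ends with an assistant message."""
    try:
        record = json.loads(line)
    except json.JSONDecodeError:
        return False
    messages = record.get("messages") if isinstance(record, dict) else None
    if not isinstance(messages, list) or not messages:
        return False
    roles_ok = all(
        isinstance(m, dict)
        and m.get("role") in {"system", "user", "assistant"}
        and isinstance(m.get("content"), str)
        for m in messages
    )
    return roles_ok and messages[-1]["role"] == "assistant"


pairs = [("How do I reset my password?", "Visit innovateco.com/password-reset.")]
lines = [to_training_line(q, a) for q, a in pairs]
print(all(validate_line(line) for line in lines))
```

Validating every line before upload catches malformed records early, which is cheaper than discovering them after a training job has been submitted.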

Step 4: Implement and Integrate Your Customized AI

Once you’ve chosen your approach and trained/configured your model, it’s time to put it into action.

4.1 API Integration

Most practical applications involve integrating ChatGPT via its API. This allows you to build custom interfaces or connect it to existing systems.

- Front-end Applications: Build a custom chat widget for your website.

- Internal Tools: Integrate with Slack, Microsoft Teams, or an internal knowledge portal.

- CRM/Support Systems: Connect to Zendesk, Salesforce, or Intercom to automate responses or assist agents.

4.2 User Interface Design (If applicable)

If creating a user-facing bot, design an intuitive and branded interface.

- Clear greeting messages.

- Options for common queries (buttons, quick replies).

- Easy access to human agents if the AI cannot help.
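The hand-off to a human agent can be sketched as a small routing function that sits between the model and the chat interface. This is only an illustration: the trigger phrases are hypothetical, substring matching is crude, and the fallback marker assumes the model repeats the wording from the system prompt in Step 3.1, which it may not do verbatim.

```python
from dataclasses import dataclass

# Hypothetical phrases; tune them to your own users and system prompt.
HANDOFF_REQUESTS = ("human", "real person", "agent", "representative")
FALLBACK_MARKER = "cannot assist with that specific query"


@dataclass
class Reply:
    """What the chat widget should show, and whether to hand off to a person."""
    text: str
    escalate: bool


def route_reply(user_message: str, ai_answer: str) -> Reply:
    """Escalate when the user asks for a person or the AI gives its fallback answer."""
    wants_human = any(phrase in user_message.lower() for phrase in HANDOFF_REQUESTS)
    ai_gave_up = FALLBACK_MARKER in ai_answer.lower()
    if wants_human or ai_gave_up:
        return Reply("Connecting you with a member of our support team.", True)
    return Reply(ai_answer, False)


print(route_reply("Can I talk to a real person?", "Sure, here is some info."))
```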

Step 5: Test, Evaluate, and Iterate

Customizing an AI is an ongoing process, not a one-time task.

5.1 Conduct Rigorous Testing

- Internal Testing: Have employees from different departments test the AI with a wide range of queries.

- User Testing (Pilot Program): Roll out the AI to a small group of friendly users or customers to gather real-world feedback.
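Internal testing is easier to repeat when the queries are captured as a small regression suite. The sketch below assumes the assistant is exposed as a function that takes a query and returns text; `stub_assistant` stands in for a real API call so the example runs offline, and the test cases themselves are hypothetical.

```python
from typing import Callable

# Hypothetical test cases: each pairs a query with phrases the answer must contain.
TEST_CASES = [
    {"query": "How do I reset my password?", "must_include": ["password reset"]},
    {"query": "What is the warranty?", "must_include": ["2-year", "warranty"]},
]


def run_test_cases(ask: Callable[[str], str], cases: list[dict]) -> list[dict]:
    """Call the assistant for each case and record which required phrases are missing."""
    results = []
    for case in cases:
        answer = ask(case["query"]).lower()
        missing = [p for p in case["must_include"] if p.lower() not in answer]
        results.append({"query": case["query"], "passed": not missing, "missing": missing})
    return results


def stub_assistant(query: str) -> str:
    """Stand-in for a real API call so the sketch runs offline."""
    if "password" in query.lower():
        return "Visit our password reset page."
    return "I'm not sure."


for result in run_test_cases(stub_assistant, TEST_CASES):
    print(result)
```

Rerunning the same suite after each prompt or data change shows quickly whether an update fixed one answer at the expense of another.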

5.2 Establish Evaluation Metrics

- Accuracy: How often does the AI provide correct information?

- Relevance: Is the information provided pertinent to the query?

- Tone & Style: Does it consistently adhere to your brand voice?

- Helpfulness: Do users find the AI helpful and efficient?

- Fall-back Rate: How often does the AI fail or need to escalate to a human?
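Several of these metrics reduce to simple ratios once interactions have been reviewed and labelled. The following sketch assumes a human reviewer has marked each interaction; the `Interaction` fields and the sample log are hypothetical and exist only to show the arithmetic.

```python
from dataclasses import dataclass


@dataclass
class Interaction:
    """One reviewed conversation turn; the fields are filled in by a human reviewer."""
    correct: bool
    on_brand: bool
    escalated: bool


def summarize(interactions: list[Interaction]) -> dict[str, float]:
    """Compute accuracy, tone adherence, and fall-back rate as fractions of all turns."""
    total = len(interactions)
    if total == 0:
        return {"accuracy": 0.0, "tone_adherence": 0.0, "fallback_rate": 0.0}
    return {
        "accuracy": sum(i.correct for i in interactions) / total,
        "tone_adherence": sum(i.on_brand for i in interactions) / total,
        "fallback_rate": sum(i.escalated for i in interactions) / total,
    }


# Hypothetical review results for four interactions.
log = [
    Interaction(correct=True, on_brand=True, escalated=False),
    Interaction(correct=True, on_brand=False, escalated=False),
    Interaction(correct=False, on_brand=True, escalated=True),
    Interaction(correct=True, on_brand=True, escalated=False),
]
print(summarize(log))
```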

5.3 Collect User Feedback

Implement mechanisms for users to rate responses (e.g., thumbs up/down, feedback forms) or provide direct comments.

5.4 Iterate and Refine

Use the feedback and evaluation metrics to continuously improve your AI. This might involve:

- Updating your training data with new information or corrections.

- Adjusting system prompts or contextual injection logic.

- Performing further fine-tuning runs with improved datasets.

- Modifying integration logic or UI elements.

Tips and Best Practices

- Start Small, Scale Up: Begin with a well-defined use case and expand gradually.

- Human in the Loop: Always have a clear escalation path to human agents for complex or sensitive issues.

- Monitor Performance: Regularly review AI interactions and performance metrics.

- Keep Data Fresh: Your brand and operations evolve; your AI’s knowledge base should too. Schedule regular data updates.

- Ethical AI: Prioritize fairness, transparency, and data privacy. Regularly audit for unintended biases.

- Transparency: Clearly label AI interactions so users know they are speaking with a bot.

- Leverage RAG (Retrieval Augmented Generation): For most enterprise use cases, combining prompt engineering with a robust RAG system (which retrieves context from your knowledge base) is more effective and flexible than pure fine-tuning.

Troubleshooting Common Issues

- AI Hallucinates / Gives Incorrect Information:

  - Solution: Improve the quality and breadth of your training data (for fine-tuning) or the contextual information provided in prompts (for prompt engineering/RAG). Reduce the `temperature` parameter in API calls for more deterministic output.

  - Solution: Implement guardrails and fact-checking mechanisms. Train the AI to “say I don’t know” rather than fabricating answers.

- AI Doesn’t Maintain Brand Voice:

  - Solution: Refine your system prompt with more specific instructions regarding tone, vocabulary, and forbidden phrases. Include examples of desired responses in your fine-tuning data.

- AI is Repetitive or Generic:

  - Solution: Increase the diversity of your training data. For prompt engineering, vary the wording of your contextual inputs. Increase the `temperature` slightly (but carefully) if answers are too formulaic.

- AI Misinterprets User Queries:

  - Solution: Ensure your training data covers a wide range of phrasing for common queries. For complex queries, consider breaking them down or using intent recognition systems before passing to the LLM.

  - Solution: Provide more explicit instructions in the system prompt on how to handle ambiguous queries (e.g., asking clarifying questions).

- Performance/Latency Issues:

  - Solution: Optimize API calls to send only necessary data. Consider using a smaller, faster model (e.g., `gpt-3.5-turbo`) for simpler tasks. Implement caching for frequently asked questions.
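Caching for frequently asked questions can be sketched as a thin wrapper around whatever function calls the model. This is a minimal in-memory illustration under stated assumptions: `CachedAssistant` and `fake_model` are hypothetical names, there is no expiry, and the approach only suits questions whose answers do not depend on the individual user or on recent data.

```python
import re
from typing import Callable


def normalize_query(query: str) -> str:
    """Lowercase, strip punctuation, and collapse whitespace to form a cache key."""
    cleaned = re.sub(r"[^a-z0-9\s]", "", query.lower())
    return re.sub(r"\s+", " ", cleaned).strip()


class CachedAssistant:
    """Wrap any ask(query) -> answer function with an in-memory cache."""

    def __init__(self, ask: Callable[[str], str]):
        self._ask = ask
        self._cache: dict[str, str] = {}
        self.api_calls = 0

    def answer(self, query: str) -> str:
        key = normalize_query(query)
        if key not in self._cache:
            self.api_calls += 1  # Only cache misses reach the model.
            self._cache[key] = self._ask(query)
        return self._cache[key]


def fake_model(query: str) -> str:
    """Stand-in for a real API call so the sketch runs offline."""
    return f"Answer to: {query}"


assistant = CachedAssistant(fake_model)
assistant.answer("How do I reset my password?")
assistant.answer("how do I reset my password")
print(assistant.api_calls)  # prints 1: the second query is served from the cache
```

Normalizing the query before lookup lets trivially different phrasings share one cached answer, which is where most of the latency and cost savings come from.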

Conclusion and Key Takeaways

Transforming ChatGPT from a generic generalist into a brand-specific genius is a strategic investment that pays dividends in efficiency, consistency, and customer satisfaction. It’s a journey that demands meticulous data preparation, thoughtful customization, continuous testing, and a commitment to iteration.

The key takeaways are:

- Define First: Clear goals and use cases are non-negotiable foundations.

- Data is King: High-quality, brand-specific data is the fuel for intelligent customization. Cleanliness and relevance are paramount.

- Context is Power: For most applications, effective prompt engineering and Retrieval Augmented Generation (RAG) are your most potent tools, allowing you to inject dynamic, relevant information.

- Iterate Relentlessly: AI customization is not a set-it-and-forget-it project. Continuous monitoring, feedback, and refinement are crucial for long-term success.

- Ethical and Transparent: Always prioritize user trust by maintaining transparency about AI interaction and addressing ethical concerns.

By following this guide, your organization can harness the immense power of large language models, tailoring them to perfectly resonate with your brand’s identity and seamlessly integrate into your operational fabric, truly moving from generic to genius.