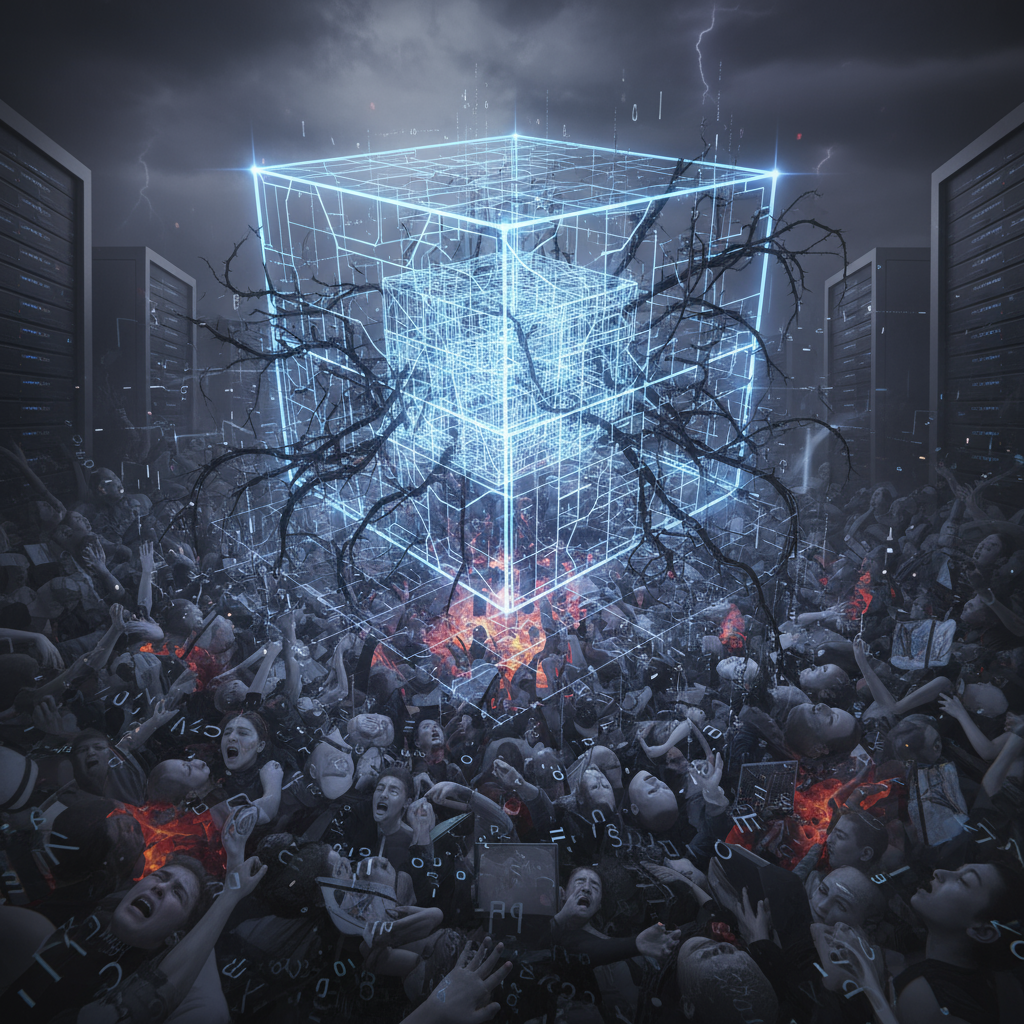

We’ve all been there: scrolling through our social media feeds, only to stumble upon a comment section that feels less like a discussion and more like a gladiatorial arena. The vitriol, the misinformation, the outright harassment: it’s a grim reality that has become a fixture of our online interactions. For years, the prevailing wisdom has been that to combat this rampant social media toxicity, we need to tweak the algorithms. If only we could re-engineer the code, surely the digital landscape would transform into a utopia of polite discourse and meaningful connection, right?

However, an increasingly vocal school of thought, highlighted by recent discussions, suggests a more nuanced and sobering truth: adjusting the algorithms alone won’t fix the fundamental problems. This isn’t just about lines of code; it’s about human nature, incentive structures, and the very design of these platforms. It’s time to peel back the layers and understand why focusing solely on algorithms amounts to a Band-Aid on a gaping wound.

The Illusion of Algorithmic Control

The idea that algorithms are the sole orchestrator of online toxicity is an appealing one. It offers a clear villain and a seemingly straightforward solution. If the algorithm amplifies outrage, then surely an algorithm designed to suppress it will lead to civility. This perspective often overlooks the complex interplay between user behavior, platform design, and the inherent human biases that algorithms, by their nature, often learn to exploit.

Algorithms are, at their core, sophisticated pattern recognition systems. They observe what content engages users most and then prioritize similar content. Unfortunately, highly emotional, polarizing, and controversial content often generates significant engagement: clicks, shares, comments, even angry replies. When platforms prioritize engagement above all else, these algorithms can inadvertently create echo chambers and feedback loops that reward and amplify toxicity, not because they are inherently malicious, but because they are doing precisely what they were designed to do: maximize user attention.
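To make that feedback loop concrete, here is a minimal toy sketch in Python. It is not how any real platform ranks content. The `Post` class, the `outrage` score, the exposure rule (`1 / (rank + 1)`), and the assumption that engagement per view grows with outrage are all invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    outrage: float           # hypothetical 0..1 score for how inflammatory a post is
    engagement: float = 1.0  # accumulated engagement; every post starts equal


def rank_feed(posts):
    """Order posts by accumulated engagement, highest first."""
    return sorted(posts, key=lambda p: p.engagement, reverse=True)


def simulate_round(posts):
    """Show the ranked feed once and record the engagement each post earns.

    Assumptions (illustrative only): a post at position `rank` gets
    exposure 1 / (rank + 1), and engagement per unit of exposure rises
    with the post's outrage score.
    """
    for rank, post in enumerate(rank_feed(posts)):
        exposure = 1.0 / (rank + 1)
        post.engagement += exposure * (1.0 + 4.0 * post.outrage)


posts = [
    Post("Local library extends hours", outrage=0.1),
    Post("Recipe thread", outrage=0.0),
    Post("You won't believe what THEY did", outrage=0.9),
]

for _ in range(10):
    simulate_round(posts)

feed = rank_feed(posts)
for post in feed:
    print(f"{post.engagement:6.2f}  {post.title}")
```

In this toy setup the inflammatory post starts at the bottom of the feed, yet it climbs to the top within a couple of rounds and then stays there, because every extra bit of exposure earns it more engagement than its neighbors. Nothing in the code "wants" outrage; the ranking rule simply rewards whatever was engaged with most.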

Beyond the Code: Human Nature and Platform Design

The deepest roots of social media toxicity lie not in the algorithms themselves, but in the fertile ground upon which they operate: human psychology and the foundational design of the platforms. Consider these critical factors:

Anonymity and Disinhibition

The relative anonymity afforded by many online platforms can lead to the “online disinhibition effect.” People are more likely to say things they wouldn’t in person, unleashing aggressive or inappropriate comments without fear of immediate social repercussions. This anonymity chips away at the social contracts that typically govern our real-world interactions, making empathy and restraint less common.

Incentive Structures for Outrage

Many social media platforms are built on an attention economy. The more time you spend on the app, the more ads you see, and the more revenue the platform generates. Outrage, controversy, and conflict are powerful drivers of attention. This creates an implicit, and sometimes explicit, incentive for users to post inflammatory content and for platforms to surface it. Changing an algorithm to de-prioritize “toxic” content might decrease engagement, a prospect many platforms are loath to embrace because their business models depend on that engagement.
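The trade-off can be sketched with a few lines of Python. This is a toy model under stated assumptions, not a description of any platform's ranking system: the posts, their `predicted_engagement` and `toxicity` values, and the `penalty` weight are all made up for illustration.

```python
# Each entry: (title, predicted_engagement, toxicity). All values are invented.
posts = [
    ("Heated pile-on", 9.0, 0.9),
    ("Rage-bait headline", 8.0, 0.8),
    ("Thoughtful explainer", 5.0, 0.1),
    ("Community Q&A", 4.0, 0.0),
]


def top_k(posts, penalty, k=2):
    """Pick the k posts with the highest score.

    score = predicted_engagement - penalty * toxicity
    A penalty of 0 is pure engagement ranking (hypothetical baseline).
    """
    ranked = sorted(posts, key=lambda p: p[1] - penalty * p[2], reverse=True)
    return ranked[:k]


def summarize(selection):
    """Return (total predicted engagement, mean toxicity) for a selection."""
    total_engagement = sum(p[1] for p in selection)
    mean_toxicity = sum(p[2] for p in selection) / len(selection)
    return total_engagement, mean_toxicity


baseline = summarize(top_k(posts, penalty=0.0))
penalized = summarize(top_k(posts, penalty=10.0))

print("engagement-only ranking:", baseline)
print("toxicity-penalized ranking:", penalized)
```

With these invented numbers, the penalized feed is far less toxic but also carries roughly half the predicted engagement of the baseline. The specific figures mean nothing; the point is that under an attention-based business model, the cleaner feed shows up on the balance sheet as a loss.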

The “Us vs. Them” Mentality

Social media has become incredibly effective at segmenting communities and fostering an “us vs. them” mindset. Algorithms, by showing us more of what we already agree with, can exacerbate this tribalism. When we are constantly exposed to information that reinforces our existing viewpoints, and are rarely challenged by opposing opinions, it becomes easier to demonize “the other side” and engage in hostile online interactions. This isn’t solely the algorithm’s fault; it’s how humans often react to perceived threats to their in-group.

What Really Needs to Change?

If algorithms aren’t the magic bullet, then what solutions should we be pursuing? The answer is likely multifaceted and requires a re-evaluation of fundamental principles:

- Rethinking Business Models: As long as engagement at all costs reigns supreme, toxicity will flourish. Platforms need to explore business models that aren’t solely reliant on maximizing attention. Could subscription models, or models that reward positive engagement over rapid virality, be part of the solution?

- Prioritizing User Well-being: Platforms should shift their focus from simply “connecting the world” to actively promoting healthy and constructive interactions. This means investing in robust moderation that combines algorithmic filtering with human oversight. It also means giving users more granular control over their feeds, along with access to mental health resources.

- Empowering Users and Fostering Digital Literacy: Education plays a crucial role. Users need tools and knowledge to identify misinformation, disengage from toxic interactions, and understand the manipulative tactics often employed online. Promoting media literacy and critical thinking skills from a young age can build more resilient online communities.

- Designing for Empathy: Can platforms be designed in ways that subtly encourage empathy and understanding? Features that promote thoughtful rather than impulsive responses, or that highlight common ground, could make a difference. Reducing the ease of anonymous abuse and encouraging a sense of individual accountability could also be impactful.

- Regulation and Accountability: Governments and regulatory bodies have a role to play in holding platforms accountable for the societal impact of their designs and content moderation policies. This isn’t about censorship, but about establishing clear guidelines for content that promotes harm, spreads hate speech, or incites violence.

The Path Forward

The journey to a less toxic social media landscape is complex and demands more than just tinkering with lines of code. It requires a fundamental shift in how we conceive of these platforms, moving beyond the illusion of algorithmic control to address the deeper human and systemic issues at play. This isn’t to say algorithms have no role; they can and should be optimized to reduce the amplification of harmful content. However, they are merely tools, and like any tool, their impact is determined by the hands that wield them and the environment in which they operate.

Ultimately, fostering healthier online spaces will require a collaborative effort from platform developers, policymakers, researchers, and, most importantly, us, the users. By acknowledging the limits of algorithmic fixes and embracing a more holistic approach, we can begin to build a digital world that truly connects, informs, and empowers, rather than divides and diminishes.