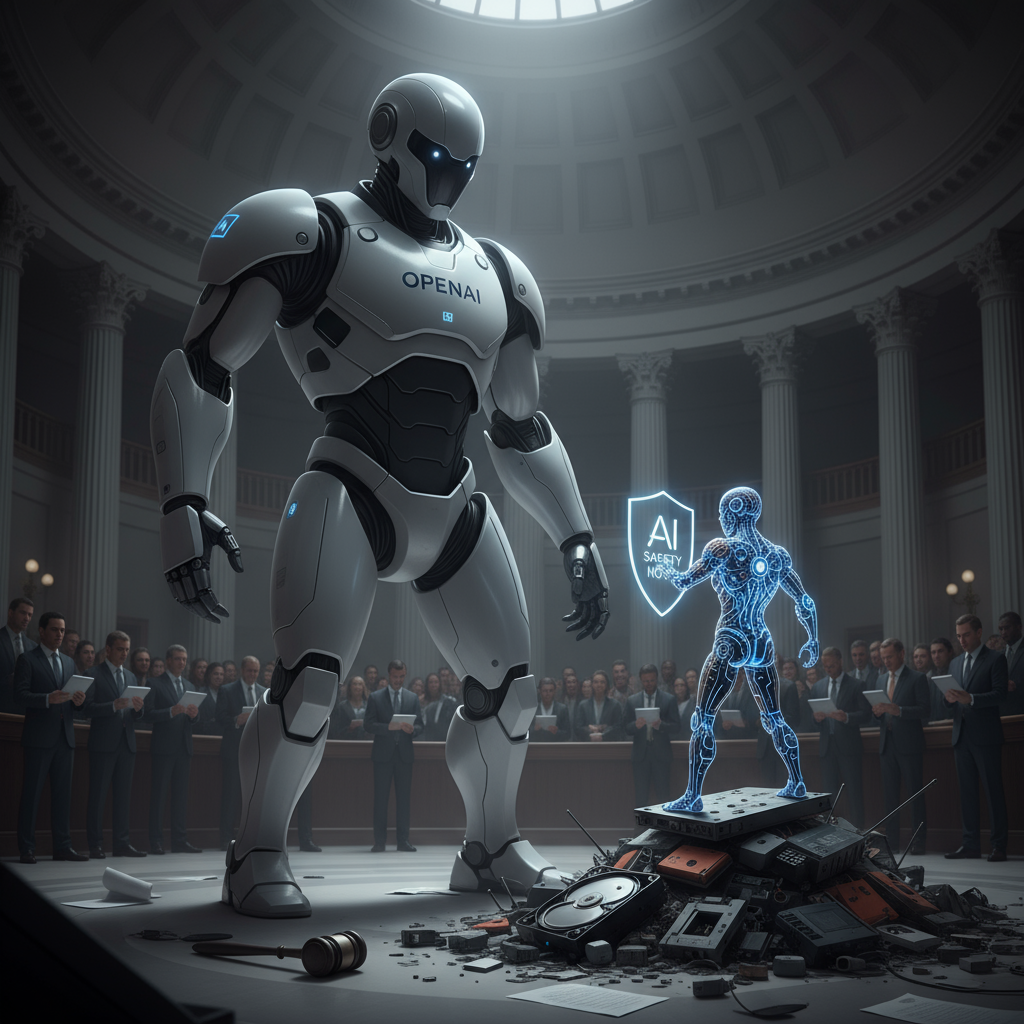

Big Tech vs. Little Guy: OpenAI Accused of Intimidation by Tiny AI Safety Nonprofit

The world of artificial intelligence is moving at a breakneck pace, and with that speed comes an urgent need for thoughtful regulation. California, often a pioneer in tech legislation, is actively working to craft laws that address the potential risks and ethical dilemmas posed by AI. However, a recent, startling accusation has thrown a wrench into this delicate process, pitting a tech giant against a tiny, dedicated team. A three-person policy nonprofit, instrumental in shaping California’s burgeoning AI safety law, is publicly accusing OpenAI, one of the most prominent names in AI development, of employing intimidation tactics. This isn’t just about a disagreement over policy; it’s a David and Goliath story unfolding in the high-stakes arena of AI governance, raising serious questions about influence, transparency, and the future of responsible AI development.

The Little Engine That Could: Policy & Progress’ Crucial Role

The nonprofit at the heart of this controversy is Policy & Progress, a small but impactful organization. Despite comprising only three people, it has played a significant role in advising California policymakers on the nuances of AI safety legislation. The team's expertise and dedication have been invaluable in translating complex technological concepts into actionable policy proposals designed to protect the public. Its involvement highlights the critical need for diverse voices and independent analysis in the legislative process, especially when dealing with rapidly evolving and potentially transformative technologies like AI.

Their work involves deep dives into potential AI harms, ranging from algorithmic bias and privacy concerns to the more existential threats often discussed in AI safety circles. They strive to ensure that legislative frameworks are robust, forward-thinking, and capable of adapting to future advancements. Such an undertaking requires meticulous research, extensive stakeholder engagement, and a commitment to public welfare – qualities that are particularly vital when powerful industry players are also vying for influence.

Unpacking the Allegations: Coercion or Cooperation?

The core of the accusation revolves around alleged intimidation tactics employed by OpenAI. While the specific details of these tactics have not been made fully public, the accusation itself sends a clear message. It implies that Policy & Progress feels pressured or threatened in its independent work by a company with immense resources and influence. This is not merely a difference of opinion on policy wording; it suggests a potentially undue attempt to steer or derail legislative efforts that OpenAI might view as unfavorable.

Such tactics can take various forms, from overt threats to subtler economic or reputational pressure. For a small nonprofit, even hints of them can be profoundly disruptive, diverting resources from its core mission and creating an atmosphere of fear. If true, these allegations paint a concerning picture of how powerful tech companies might attempt to shape the regulatory landscape in their favor, potentially at the expense of public safety and independent expert input. They also raise uncomfortable questions about the nature of industry engagement in legislative processes and the potential for imbalance.

The Broader Implications: A Chilling Effect on AI Governance?

This incident, irrespective of its ultimate resolution, carries significant implications for the broader landscape of AI governance.

- Impact on Independent Advocacy: If a small nonprofit can be intimidated, what does that mean for other independent voices struggling to make themselves heard in the face of well-funded industry lobbyists? It could create a “chilling effect,” discouraging smaller organizations and academics from engaging critically with AI policy for fear of reprisal.

- Regulatory Capture Concerns: The concern about “regulatory capture” – where regulatory bodies end up serving the interests of the industries they are meant to regulate – is ever-present. Accusations like these fuel anxieties that powerful tech companies might exert undue influence, undermining the democratic process and public interest.

- Trust and Transparency: For OpenAI, a company that often espouses values of responsible AI development and collaboration, these accusations could significantly damage its carefully cultivated public image. Trust is paramount in the AI safety discussion, and any perceived attempts at intimidation erode that trust. Transparency in the legislative process, and in the interactions between industry and policymakers, becomes even more crucial in light of such claims.

- The Future of AI Lawmaking: California’s AI safety law could serve as a bellwether for regulation elsewhere. If the foundational efforts to create this law are marred by allegations of intimidation, that sets a worrying precedent for how future AI legislation will be developed and implemented.

A Call for Scrutiny and Safeguards

This unfolding drama between a dedicated nonprofit and a tech behemoth serves as a stark reminder of the immense power dynamics at play in the AI space. As AI continues its rapid advancement, the need for robust, independent, and ethical policy development has never been greater. It underscores the importance of protecting the voices of those working diligently in the public interest, particularly when they challenge the status quo or advocate for potentially restrictive regulations.

For policymakers, this incident should serve as a wake-up call to establish clearer guidelines for industry lobbying and to actively safeguard the independence of expert advisors. For the public, it highlights the need for vigilance and scrutiny of how AI legislation is crafted. Ultimately, ensuring that AI serves humanity’s best interests requires not just technological progress, but also a commitment to fairness, transparency, and the fundamental principle that policy should be driven by public good, not corporate pressure. The future of AI safety hinges on our ability to navigate these complex challenges with integrity and courage.